Advanced Docker Commands / Getting to know important Docker commands

Docker containers are portable and lightweight environments that encapsulate an application and its dependencies, ensuring a consistent runtime across platforms. They are instances of Docker images containing application code, runtime, libraries, and dependencies. Leveraging Linux kernel features like namespaces and control groups (cgroups), containers offer isolation and security, preventing conflicts and resource contention. Their scalability makes them suitable for microservices architectures and cloud-native applications.

Introduction to Docker Containers

Docker containers revolutionize the way applications are packaged, distributed, and deployed. They enable developers to build, ship, and run applications consistently across different environments, from development to production. At their core, Docker containers leverage containerization technology to encapsulate an application and its dependencies into a lightweight, portable unit.

One key advantage of Docker containers is their efficiency. Unlike traditional virtual machines, which require a separate operating system for each instance, Docker containers share the host system’s kernel and resources. This results in faster startup times and reduced resource overhead, making Docker containers ideal for deploying microservices and containerized applications at scale.

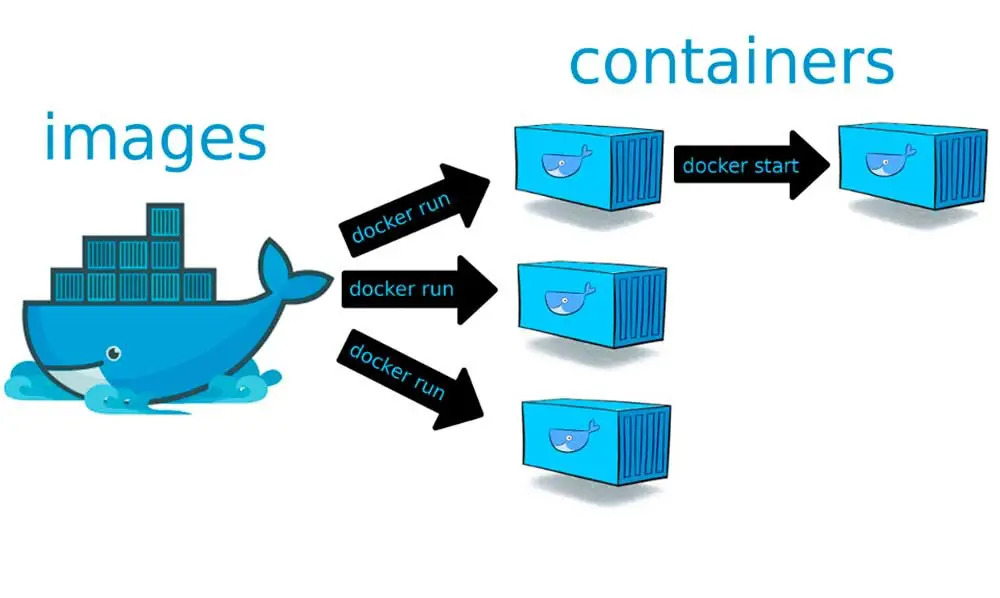

Creating Containers with Docker Run Command

The `docker run` command is the primary way to create and run Docker containers. It allows users to specify various options and parameters to customize the container’s behaviour. To create a container with the `docker run` command, you need to provide the name of the Docker image you want to use as the base for the container.

Additionally, you can specify options such as port mappings, volume mounts, environment variables, and resource constraints to configure the container according to your requirements. Once the container is created, `docker run` starts it and, unless you detach with `-d`, attaches your terminal to the container’s standard output and error streams. Adding `-i` (usually together with `-t`) keeps standard input open as well, allowing you to interact with the container’s runtime environment.
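As a rough sketch of the options described above, the shell function below wraps a single `docker run` call. The helper name `run_web_demo`, the container name, the port numbers, the `APP_ENV` variable, the memory limit, and the `nginx:latest` image are all illustrative assumptions rather than values taken from this article.

```shell
# Sketch: start a detached container with a few common `docker run` options.
# Helper name, ports, env var, memory limit, and image are illustrative assumptions.
run_web_demo() {
  name="${1:-web-demo}"          # container name (hypothetical default)
  docker run \
    --detach \
    --name "$name" \
    --publish 8080:80 \
    --env APP_ENV=staging \
    --memory 256m \
    nginx:latest
}
```

Calling `run_web_demo my-site` should start the container in the background; `docker ps` can then be used to confirm that it is running.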

Managing Container Lifecycle with Docker

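Managing the lifecycle of Docker containers involves tasks such as starting, stopping, pausing, and deleting containers as needed, and Docker provides a command for each of these operations. For example, the `docker start` command starts one or more stopped containers, while the `docker stop` command gracefully stops running containers by sending them a SIGTERM signal, followed by SIGKILL if they have not exited after a grace period (10 seconds by default). The `docker pause` and `docker unpause` commands suspend and resume a container’s processes, and `docker rm` deletes a stopped container.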

Moreover, Docker allows users to inspect container logs, monitor resource usage, and execute commands inside running containers using the `docker logs`, `docker stats`, and `docker exec` commands, respectively. These management commands empower users to maintain and troubleshoot Docker containers effectively throughout their lifecycle.
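The lifecycle and inspection commands mentioned above could be strung together roughly as follows. This is only a sketch: the helper name `container_lifecycle_demo` is hypothetical, and it assumes a stopped container with the given name already exists.

```shell
# Sketch: walk one existing container through its lifecycle.
# "$1" is the name of an existing, stopped container (hypothetical, e.g. web-demo).
container_lifecycle_demo() {
  name="$1"
  docker start "$name"               # start the stopped container
  docker logs --tail 20 "$name"      # show the last 20 log lines
  docker stats --no-stream "$name"   # one-off snapshot of resource usage
  docker exec "$name" ls /           # run a command inside the running container
  docker pause "$name"               # suspend all processes in the container
  docker unpause "$name"             # resume them
  docker stop "$name"                # SIGTERM, then SIGKILL after the grace period
  docker rm "$name"                  # delete the stopped container
}
```

In day-to-day use these commands are usually run one at a time; grouping them in a function simply shows the order in which they tend to appear.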

Overview of Docker Images

Docker images serve as the blueprints for Docker containers, encapsulating the application code, runtime, libraries, and other dependencies. These images are built using a Dockerfile, which contains instructions for assembling the image layer by layer. Each layer represents a specific instruction in the Dockerfile, such as installing dependencies or copying files.

Pulling Images from Docker Hub

Docker Hub is a centralized repository that hosts a vast collection of Docker images, spanning various software applications, frameworks, and operating systems. To pull an image from Docker Hub, users can use the `docker pull` command followed by the name of the desired image, optionally with a tag in the form `name:tag`. If no tag is given, Docker pulls the image tagged `latest`, which is not necessarily the newest release, and stores it locally on the host system.
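A small sketch of pulling an image and checking the local copy might look like this. The helper name `pull_image_demo` and the default repository and tag (`nginx`, `1.27`) are assumptions chosen for illustration.

```shell
# Sketch: pull an image by explicit tag, then list the local copies.
# The default repository and tag are illustrative assumptions.
pull_image_demo() {
  repo="${1:-nginx}"
  tag="${2:-1.27}"
  docker pull "${repo}:${tag}"   # explicit tag instead of the implicit :latest
  docker image ls "$repo"        # confirm the image is now stored locally
}
```

Pinning a tag in this way tends to make pulls more predictable than relying on `latest`.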

Building Custom Docker Images

Building custom Docker images allows users to tailor the containerized environment to their specific requirements. To create a custom Docker image, users need to define a Dockerfile that specifies the desired configuration, dependencies, and application code. Then, they can use the `docker build` command to build the image based on the instructions provided in the Dockerfile.
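To make the build step more concrete, the sketch below writes a very small Dockerfile into a scratch directory and builds it. The helper name `build_image_demo`, the image name `myapp:0.1`, the `alpine:3.20` base image, and the file contents are all hypothetical.

```shell
# Sketch: write a tiny Dockerfile into a scratch directory and build it.
# Image name, base image, and file contents are illustrative assumptions.
build_image_demo() {
  context="$(mktemp -d)"                       # throwaway build context
  echo 'hello from the image' > "$context/message.txt"
  cat > "$context/Dockerfile" <<'EOF'
FROM alpine:3.20
WORKDIR /app
COPY message.txt .
CMD ["cat", "/app/message.txt"]
EOF
  docker build --tag "${1:-myapp:0.1}" "$context"
}
```

After `build_image_demo`, running `docker run --rm myapp:0.1` should print the contents of `message.txt`, since that is what the `CMD` instruction does.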

Pushing Images to Docker Hub

Once a custom Docker image is built, users can push it to Docker Hub to share it with others or to use it across different environments. To push an image to Docker Hub, users first authenticate with `docker login`, then tag the image with their Docker Hub username and repository name using the `docker tag` command. Finally, they can use the `docker push` command to upload the image to their Docker Hub repository.
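The tag-and-push sequence could be sketched as below. The helper name `push_image_demo` and every argument value (local image, username, repository) are placeholders, and the sketch assumes a Docker Hub account already exists.

```shell
# Sketch: tag a local image for Docker Hub and push it.
# "$1" = local image, "$2" = Docker Hub username, "$3" = repository:tag (all hypothetical).
push_image_demo() {
  local_image="$1"
  target="$2/$3"
  docker login                          # prompts for Docker Hub credentials
  docker tag "$local_image" "$target"   # add the <username>/<repository>:<tag> name
  docker push "$target"                 # upload the image layers to Docker Hub
}
```

For example, `push_image_demo myapp:0.1 alice myapp:0.1` would publish the image as `alice/myapp:0.1`, where `alice` stands in for a real username.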

Docker Networking Basics

Networking in Docker allows containers to communicate with each other and the outside world. By default, Docker creates a bridge network for containers on a host, enabling them to communicate with each other using IP addresses. This internal communication is isolated from the host network, providing security and resource isolation.

Docker also supports other network modes, such as host mode and overlay mode, which offer different levels of networking flexibility and performance. Host mode allows containers to use the host network stack directly, while overlay mode enables communication between containers across multiple hosts in a Docker swarm.
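The two modes can be tried out roughly as follows. This is a sketch under a few assumptions: the helper name `network_modes_demo` and the network name are hypothetical, host mode is assumed to run on a Linux host, and the overlay network assumes swarm mode is already active on the node (for example after `docker swarm init`).

```shell
# Sketch: try host mode and create an overlay network.
# Helper and network names are illustrative assumptions.
network_modes_demo() {
  # Host mode: the container uses the host's network stack directly.
  docker run --rm --network host alpine:3.20 ip addr
  # Overlay mode: needs swarm mode to be active on this node.
  docker network create --driver overlay --attachable "${1:-demo-overlay}"
}
```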

Configuring Docker Networks

Configuring Docker networks involves defining network options such as subnet, gateway, and driver type to meet specific requirements. Docker provides a command-line interface (`docker network`) and a declarative syntax, such as Docker Compose files, to create and manage networks. Options like the subnet and driver are set when a network is created; to change them, the network has to be removed and recreated.
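A minimal sketch of creating a network with an explicit driver, subnet, and gateway is shown below. The helper name `create_network_demo`, the network name, and the address range are assumptions; the subnet should be one that does not clash with networks already in use on the host.

```shell
# Sketch: create a bridge network with an explicit subnet and gateway.
# Network name and address range are illustrative assumptions.
create_network_demo() {
  net="${1:-app-net}"
  docker network create \
    --driver bridge \
    --subnet 172.28.0.0/16 \
    --gateway 172.28.0.1 \
    "$net"
  docker network inspect "$net"   # print the resulting configuration as JSON
}
```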

Additionally, Docker supports multi-host networking and service discovery through Docker Swarm, while orchestrators such as Kubernetes add features like network policies.

Connecting Containers with Docker Networks

Docker networks enable containers to communicate with each other using container names, network aliases, or service names. Containers connected to the same user-defined network can communicate directly without publishing ports on the host. This enhances security and simplifies container orchestration, as containers can discover each other through Docker’s built-in DNS resolution or the service discovery mechanisms provided by Docker.
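One way to see alias-based discovery in action is sketched below. The helper name `connect_containers_demo`, the network name, the container name `web`, and the alias `frontend` are hypothetical, and the second container may need a short delay or retry if the web server has not finished starting.

```shell
# Sketch: two containers on one user-defined network, reached by alias.
# Network, container, and alias names are illustrative assumptions.
connect_containers_demo() {
  net="${1:-demo-net}"
  docker network create "$net"
  # Start a web server on the network; note that no ports are published to the host.
  docker run --detach --name web --network "$net" --network-alias frontend nginx:latest
  # Fetch the default page from a second container by resolving the alias.
  docker run --rm --network "$net" alpine:3.20 wget -qO- http://frontend
}
```

An already running container can be attached to the same network with `docker network connect`.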

Docker Volumes: Persistent Data Storage

Docker volumes are a powerful feature for managing persistent data storage in Docker containers. Unlike container filesystems, which are ephemeral and reset when the container is deleted, volumes provide a way to store and share data between containers and the host system. They ensure that data persists even when containers are stopped or removed.

Utilizing Docker volumes involves creating volume objects and mounting them into containers at runtime. This allows containers to read from and write to the volume, enabling data persistence across container restarts and updates. Docker volumes support various volume drivers, including the default `local` driver and plugins for remote and cloud-based storage, providing flexibility and scalability for different use cases.
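The persistence behaviour described above can be sketched with two short-lived containers that share one volume. The helper name `volume_demo`, the volume name, the mount path `/data`, and the file name are assumptions for illustration.

```shell
# Sketch: data written to a named volume outlives the container that wrote it.
# Volume name, mount path, and file name are illustrative assumptions.
volume_demo() {
  vol="${1:-app-data}"
  docker volume create "$vol"
  # Write a file into the volume from one short-lived container...
  docker run --rm --volume "$vol:/data" alpine:3.20 sh -c 'echo persisted > /data/note.txt'
  # ...and read it back from a second container after the first has been removed.
  docker run --rm --volume "$vol:/data" alpine:3.20 cat /data/note.txt
}
```

The volume can be deleted afterwards with `docker volume rm`.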

Utilizing Docker Bind Mounts

Docker bind mounts offer another method for managing data in Docker containers by directly mounting host directories or files into container filesystems. This allows containers to access and modify host files and directories without copying them into the container. Bind mounts are particularly useful for sharing configuration files, logs, and other resources between the host and containers.

Managing data in Docker containers using bind mounts involves specifying the host directory or file path and the corresponding mount point within the container. This configuration allows containers to access the data as if it were part of their filesystem, simplifying data management and improving performance by avoiding unnecessary data duplication.
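A bind mount could be set up along the following lines. The helper name `bind_mount_demo`, the host directory, and the mount point `/etc/app` are hypothetical; the host path is assumed to be absolute, and the mount is read-only so the container cannot modify host files.

```shell
# Sketch: mount a host directory into a container as a read-only bind mount.
# Host directory and mount point are illustrative assumptions.
bind_mount_demo() {
  host_dir="${1:-$PWD/config}"   # must be an absolute path on the host
  mkdir -p "$host_dir"
  docker run --rm \
    --mount type=bind,source="$host_dir",target=/etc/app,readonly \
    alpine:3.20 ls -l /etc/app
}
```

Dropping `readonly` from the `--mount` value would let the container write back to the host directory.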

Managing Data in Docker Containers

Managing data within Docker containers is essential for ensuring data integrity, availability, and performance. Docker provides several mechanisms for storing and accessing data, including volumes and bind mounts. These features enable containers to persist data across container lifecycles, share data between containers and the host system, and access host resources efficiently. By understanding and leveraging Docker’s storage management capabilities, users can effectively manage data in their containerized environments and build robust, scalable applications.

Docker Security Best Practices

When it comes to security in Docker, following best practices is paramount to safeguarding your containerized applications. One crucial practice is to regularly update Docker and its dependencies to patch any known vulnerabilities. Additionally, it’s essential to implement least privilege principles by restricting container permissions and limiting access to sensitive resources.

Furthermore, employing image scanning tools to analyze Docker images for security vulnerabilities before deployment helps mitigate potential risks. Another recommended practice is to implement network segmentation to isolate containers and minimize the impact of a potential breach. By adopting these best practices, organizations can enhance the security posture of their Docker environments and protect against cyber threats effectively.

Securing Docker Containers and Images

Securing Docker containers and images involves implementing various measures to mitigate security risks. One approach is to rely on Docker’s built-in isolation, which uses namespaces and control groups. Additionally, implementing resource constraints and applying security profiles, such as AppArmor, SELinux, and seccomp, enhances container security by restricting system access.
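Several of these measures map onto `docker run` flags, as in the sketch below. The helper name `run_hardened_demo`, the user and group IDs, and the specific limits are assumptions; suitable values depend on the workload.

```shell
# Sketch: run a container with reduced privileges and resource limits.
# UID/GID and limit values are illustrative assumptions.
run_hardened_demo() {
  docker run --rm \
    --user 1000:1000 \
    --read-only \
    --cap-drop ALL \
    --security-opt no-new-privileges:true \
    --memory 128m \
    --cpus 0.5 \
    --pids-limit 100 \
    alpine:3.20 id
}
```

Here `--user` avoids running as root, `--read-only` and `--cap-drop ALL` narrow what the process can change, and the memory, CPU, and PID limits are enforced through control groups.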

Furthermore, practicing container image hygiene by regularly scanning and verifying the integrity of images before deployment helps prevent the use of compromised or malicious images. Employing container runtime security tools and monitoring solutions enables real-time threat detection and response, enhancing the overall Docker security posture. By prioritizing container security measures, organizations can minimize the risk of security breaches and protect sensitive data effectively.

Docker Content Trust and Image Signing

Docker Content Trust (DCT) and image signing provide mechanisms for ensuring the integrity and authenticity of Docker images. DCT enables image publishers to digitally sign their images using cryptographic keys, so that clients can verify their authenticity during image pull operations. This prevents the use of tampered or malicious images, safeguarding against supply chain attacks.
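The signing and verification flow might be sketched as follows. The helper name `signed_image_demo` is hypothetical, and the image argument is a placeholder for a repository the publisher can push to; the sketch also assumes signing keys have been set up for that repository.

```shell
# Sketch: sign an image as a publisher, then pull it with trust enforced.
# "$1" is an image reference such as <username>/<repository>:<tag> (hypothetical).
signed_image_demo() {
  image="$1"
  docker trust sign "$image"                    # publisher: sign the tag and push it
  docker trust inspect --pretty "$image"        # list the signers and signed tags
  DOCKER_CONTENT_TRUST=1 docker pull "$image"   # consumer: refuse tags without valid signatures
}
```

Setting `DOCKER_CONTENT_TRUST=1` in the shell environment applies the same check to later pulls in that session.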

Conclusion

Mastering Docker commands and understanding Docker’s features are essential for developers and DevOps professionals aiming to streamline application development and deployment processes. By going beyond the basic commands into networking, storage management, and security practices, users can optimize their Docker environments for efficiency, scalability, and security. Embracing Docker’s versatility and adopting best practices empowers organizations to leverage containerization effectively, facilitating agile development practices and enhancing overall productivity in the modern software development landscape.